User Guide

Description

These layouts were developed to preserve stereophony and to let audio professionals mix in a spatial format that builds on everything they already know from traditional stereo and surround mixing. A notable feature of these formats is that no active processing is applied during playback. Studios can therefore monitor the mix without compensating for active filters, room modeling or reverb that a playback system might otherwise add outside the audio engineer's control; Mach1 Spatial adds none of these. It also means the Mach1 Spatial APIs are extremely lightweight for developers to integrate (following a few rough guidelines), which streamlines the mixing and delivery pipeline. Finally, the audio engineer decides what 'spatial audio' means in their mix and has full creative control: they can aim for realism, define new rules for spatial audio, or do both in the same mix.

This approach is a virtual, algorithmic version of Vector Base Panning (VVBP). Object-oriented audio and ambisonics are designed to simulate soundfield reconstruction; VVBP is instead designed to distribute audio correctly and take full advantage of the phantom sound sources created around the listener. Until now, that approach has only worked with a physical array of loudspeakers. Mach1 Spatial's virtual Vector Base Panning lets users pan directly into their spatial mix without having to reconstruct an approximation of the sound field during playback, and the result is correctly downmixed to stereo for ideal playback.

Mach1 Spatial Patented Multichannel Layouts

Mach1 Spatial 4ch (M1Spatial_4)

This layout is designed for a reduced channel count and only supports listener rotation on the horizontal plane: the stereo downmix algorithm applies yaw movement only.

Mach1 Spatial 8ch (M1Spatial_8) [Default layout]

This layout is designed for ease of understanding and quality while using only 8 channels of audio to provide 3 degrees of freedom for the listener. The stereo downmix algorithm supports yaw, pitch and roll movement.

Mach1 Spatial 14ch (M1Spatial_14)

This layout raises the spatial panning resolution further and has been used for interoperability with surround channel-bed formats. The stereo downmix algorithm supports yaw, pitch and roll movement.

Multichannel Deliverable

Deliverables are flexible, but ideally a deliverable is a single multichannel file of 4, 8 or 14 channels. No additional metadata is required for the Mach1Decode API to recreate the exact stereo image during playback. Prepare your multichannel mix, plus an optional head-locked stereo mix, for distribution or layback to a target video via the M1-Transcoder application.
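A deliverable can be sanity-checked before it goes to the M1-Transcoder. The sketch below is a hypothetical helper (not part of any Mach1 tool) that opens a WAV file with Python's standard `wave` module and maps its channel count to one of the three layouts. It assumes an uncompressed PCM WAV; note that `wave` only accepts the `WAVE_FORMAT_EXTENSIBLE` header that many DAWs write for multichannel files from Python 3.12 onward.

```python
import wave

# Channel counts of the three Mach1 Spatial layouts described in this guide.
LAYOUTS_BY_CHANNELS = {4: "M1Spatial_4", 8: "M1Spatial_8", 14: "M1Spatial_14"}


def layout_for_channel_count(channels: int) -> str:
    """Return the Mach1 Spatial layout name implied by a channel count."""
    try:
        return LAYOUTS_BY_CHANNELS[channels]
    except KeyError:
        raise ValueError(
            f"{channels} channels is not a Mach1 Spatial deliverable (expected 4, 8 or 14)"
        ) from None


def check_deliverable(path: str) -> str:
    """Open a PCM WAV file and report which layout its channel count matches."""
    with wave.open(path, "rb") as wav:
        return layout_for_channel_count(wav.getnchannels())
```

A 6-channel file, for example, raises `ValueError` instead of silently being treated as a spatial mix.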

Mach1 Spatial System Tools

Mach1 System Helper (m1-system-helper)

The Mach1 Spatial System allows automatic communication between all plugins and standalone applications. This is handled by a background service, "M1-System-Helper", which sets up a temporary, lightweight network between the plugins and apps at runtime. The following ports are used behind the scenes when plugins and standalone applications launch:

Ports: 9001-9004, 9100, 10000-11000, 12345

These ports are defined in the `settings.json` file inside a `Mach1` folder in your OS's common User Data folder.

Ports are searched and configured on launch for these ranges on the localhost of the user's computer.
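The range search described above can be pictured as trying each port in turn until one can be bound. This is a hypothetical Python illustration, not the m1-system-helper's actual code; the function name and the use of UDP (the usual transport for OSC) are assumptions.

```python
import socket


def first_free_udp_port(start: int, end: int, host: str = "127.0.0.1") -> int:
    """Return the first UDP port in [start, end] that can be bound on host."""
    for port in range(start, end + 1):
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
            try:
                sock.bind((host, port))
            except OSError:
                continue  # port already in use, try the next one
            return port  # socket closes on return, freeing the port again
    raise RuntimeError(f"no free UDP port in {start}-{end}")
```

Because the probe socket is closed before returning, another process could claim the port in between; a long-running service would keep the bound socket open instead.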

Mach1 Orientation Manager (m1-orientationmanager)

The m1-orientationmanager is a background service that allows automatic communication between any plugin or standalone app that supports orientation data.

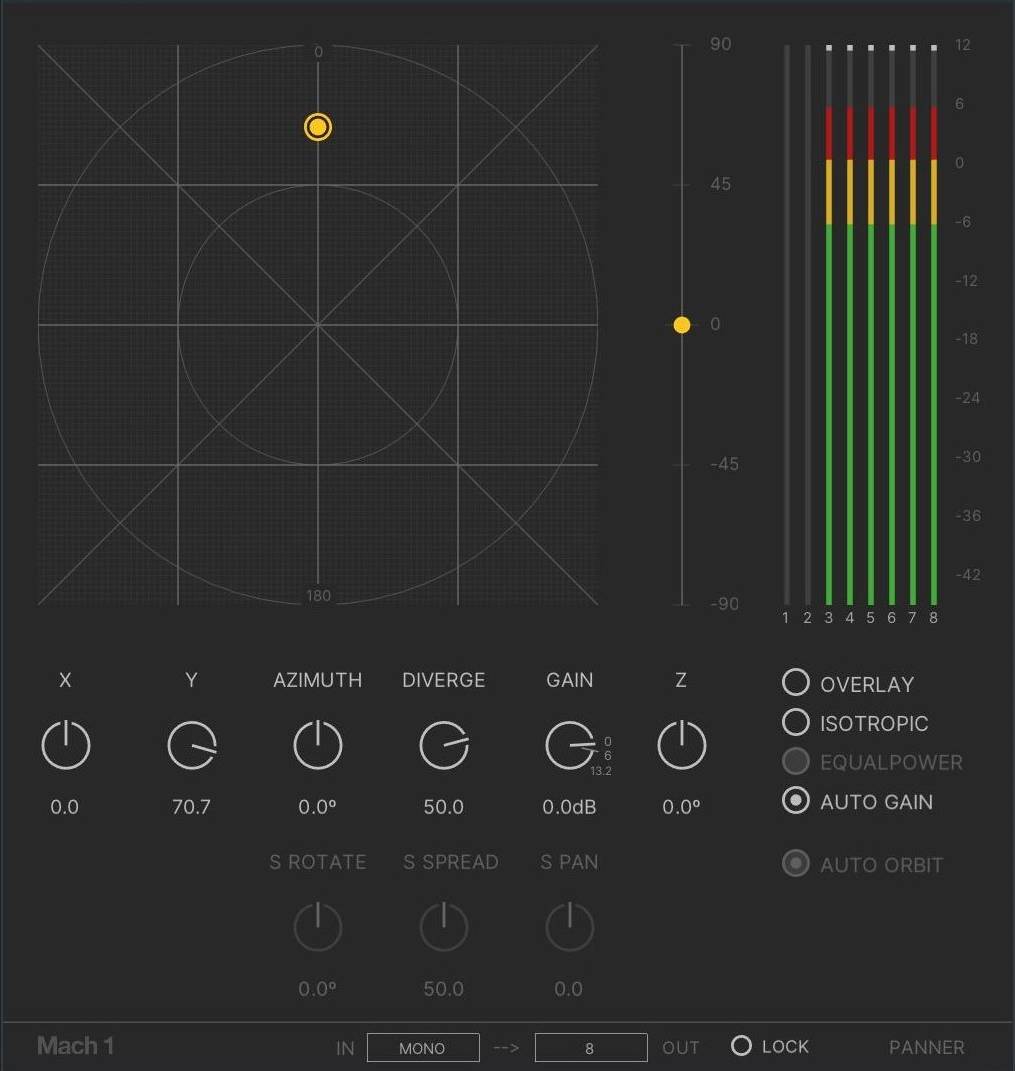

Mach1 Panner (M1-Panner)

The M1-Panner is modeled on the UI of many common surround panners and is based on a system of divergence rather than an actual 3D space representation. Its main window is a top-down view of the mixing environment. The concentric circles around the center mark different divergence amounts. The middle circle can be taken as roughly the divergence of common ambisonic encoding, while pushing beyond the second circle lets you pan sounds to isolated positions in the mix (giving wide creative freedom within a single spatial audio mix). We still recommend automating volume outside the M1-Panner plugin to simulate distance and attenuation. The M1-Panner also has a slider for altitude (vertical panning) of the track.

The M1-Panner changes depending on whether it is placed on a mono, stereo or multichannel track (stereo and multichannel modes are still in development). In a multichannel mode the M1-Panner uses the same automation as mono mode, but adds functions to rotate the stereo emitters and spread them further from or closer to the center.

Click the Overlay button to open the 2D-to-3D panning window, which snaps to the Pro Tools native video player and translates your 2D mouse movements into 3D orientation movements. The gain slider defaults to +6 dB to compensate for the pan law in this format.
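For reference, the default +6 dB gain roughly doubles the signal amplitude, using the standard decibel-to-amplitude relation:

```latex
g = 10^{G_{\mathrm{dB}}/20} = 10^{6/20} \approx 1.995
```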

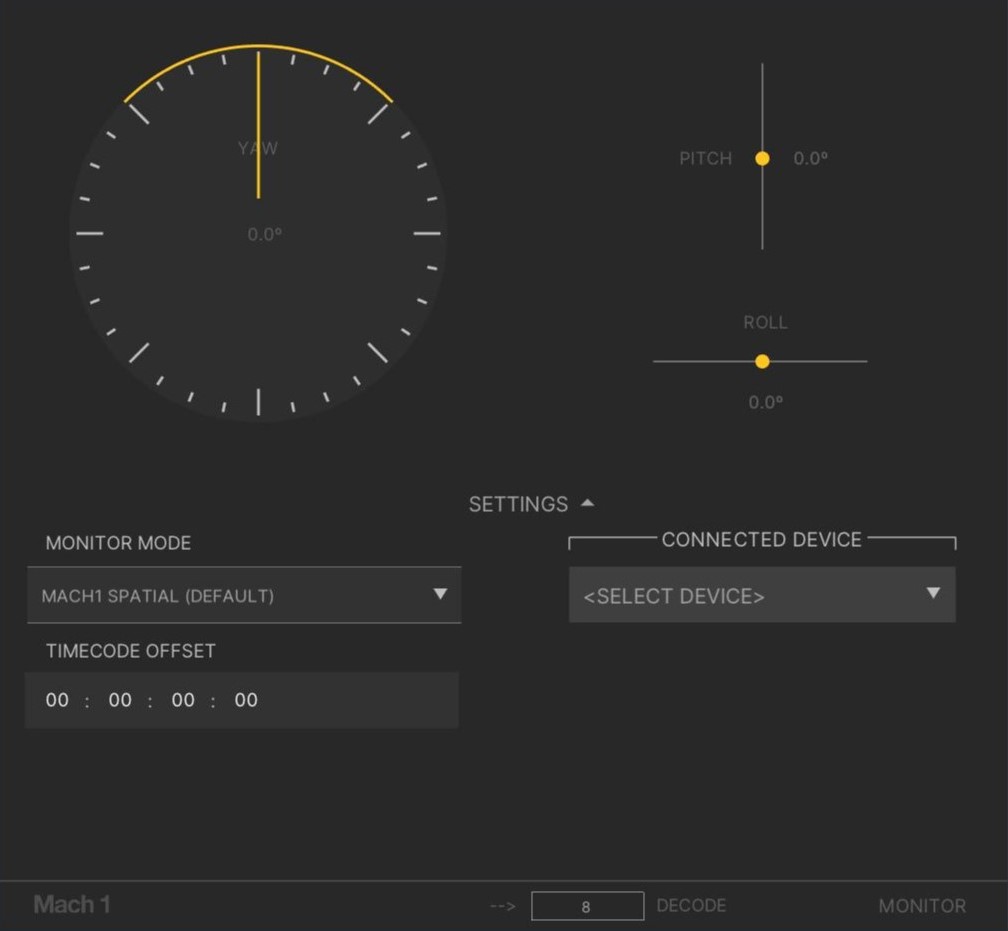

Mach1 Monitor (M1-Monitor)

The M1-Monitor adds a monitoring stage that uses the decoding math shared in our SDK, so the audio engineer can hear their spatial audio mix during post-production and mixing. It contains Yaw, Pitch and Roll sliders for setting a simulated listener orientation with the mouse during the mix.

The M1-Monitor automatically connects with the M1-Player to receive input orientation from the M1-Player's mouse controlled orientation. Both the M1-Monitor and the M1-Player simultaneously receive orientation from any 3rd party IMU or head tracking solution supported by the background service "M1-OrientationManager". The M1-Monitor also sends transport location automatically to the M1-Player.

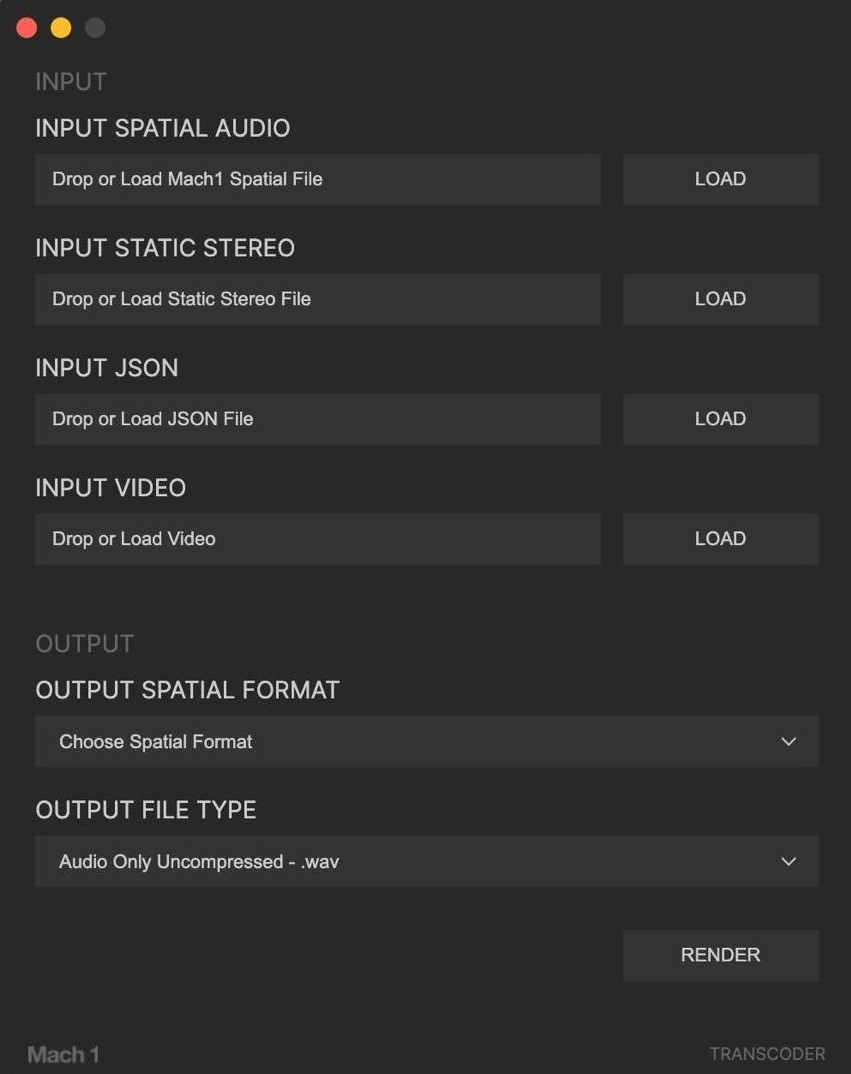

Mach1 Transcoder (M1-Transcoder)

This standalone application safely applies (encodes) the user's audio mix into their video content's container. The Transcoder renders the final video needed for delivery to available platforms/apps, and also downmixes the spatial audio as closely as possible to ambisonics for other deliverables.

Mach1 Player (M1-Player)

The M1-Player lets users simulate orientation angles beyond what the Monitor offers. Once launched, the M1-Player links to the Monitor and controls the Monitor's orientation without needing keyboard focus. Drag a video into the M1-Player after launch to load a 360 video.

- 'Z' – Switches between flat mode and spherical 360 mode

- 'O' – Turns on reference angle overlay image onto the video

- 'D' – Switches between Stereoscopic and Monoscopic videos

- 'H' – Hold this key to view M1-Player statistics

Game Engine Deployment

The following section details the current spatial audio implementation for game engines: installation into standard projects, general use and deployment, and the features currently available for implementation.

Unreal Engine

Mach1 Spatial Unreal Engine Plugin

Mach1 Spatial Plugin installs classes that can be used in Unreal Engine's Editor for deploying different forms of Mach1 Spatial Audio Formats. The classes call the Mach1Decode library for each target platform/device with the following features:

- SourcePoint (Default Rotator): Allows the deployed audio/mix to rotate to a specific point in relation to the user's position/orientation. This gives positional abilities to the audio without changing anything in the deployed audio/mix.

- Custom Attenuation Curves: When active, the user can attach an attenuation curve to the Actor, relative to the user's position, to simulate any distance falloff desired for the deployed audio/mix. Use them in groups to create crossfade scenarios with complete control.

- SourcePoint Closest Point (Rotator Plane): When active, this assigns the SourcePoint feature to a plane/wall and calculates the user's closest point on that plane/wall, using it as the SourcePoint location on every update/tick.

- Check Yaw/Pitch: Allows the user to ignore certain orientation inputs to that deployed audio/mix.

- Fade Settings: These actors include built-in fade settings, making it easy to add a Fade In or Fade Out when needed.

- Custom Node Activation & Node Settings: These classes/actors can be controlled with Blueprint nodes however your project needs.

- Zone Interruption (Mute Inside/Outside): If the camera enters one of the SpatialSound's reference objects, the output volume for that object is set to 0.
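The SourcePoint and Closest Point features come down to two small geometry steps: find the point on a wall nearest the listener, then find the horizontal angle from the listener to that point. The Python sketch below illustrates those steps under assumed conventions (x right, y forward, z up; yaw in degrees increasing clockwise from forward, matching the left->right ++ yaw convention used for OSC orientation in this guide). It is not the plugin's code, and Unreal and Unity each use their own axis conventions.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, o): return Vec3(self.x + o.x, self.y + o.y, self.z + o.z)
    def __sub__(self, o): return Vec3(self.x - o.x, self.y - o.y, self.z - o.z)
    def scale(self, s): return Vec3(self.x * s, self.y * s, self.z * s)
    def dot(self, o): return self.x * o.x + self.y * o.y + self.z * o.z


def closest_point_on_rect(p, origin, u_axis, v_axis):
    """Closest point to p on the rectangle origin + s*u_axis + t*v_axis, s,t in [0, 1].

    u_axis and v_axis are the rectangle's two perpendicular edge vectors.
    """
    d = p - origin
    s = min(max(d.dot(u_axis) / u_axis.dot(u_axis), 0.0), 1.0)
    t = min(max(d.dot(v_axis) / v_axis.dot(v_axis), 0.0), 1.0)
    return origin + u_axis.scale(s) + v_axis.scale(t)


def yaw_to_point(listener, target):
    """Horizontal angle in degrees (0-360, clockwise from +y) from listener to target."""
    d = target - listener
    return math.degrees(math.atan2(d.x, d.y)) % 360.0
```

Calling `closest_point_on_rect` once per tick and feeding the result to `yaw_to_point` mirrors the "closest point per update/tick" behavior described above.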

Unity

Mach1 Spatial Unity Package

The package uses Unity scripts to call functions from the Mach1Decode library for each target platform, with the following features:

- SourcePoint (Default Rotator): Allows the deployed audio/mix to rotate to a specific point in relation to the user's position/orientation.

- Custom Attenuation Curves: When active, the user can attach an attenuation curve to the object, relative to the user's position.

- SourcePoint Closest Point (Rotator Plane): Assigns the SourcePoint feature to a plane/wall and calculates the user's closest point.

- Check Yaw/Pitch/Roll: Allows the user to ignore certain orientation inputs to that deployed audio/mix.

- Zone Interruption (Mute Inside/Outside): Controls output volume based on camera position relative to SpatialSound objects.

- Custom Loading: Control when to asynchronously load Mach1 audio/mixes or whether to just load on start.
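An attenuation curve can be modelled as (distance, gain) keys evaluated by linear interpolation. This Python sketch is a hypothetical stand-in for the curves edited in Unity or Unreal; engine curve assets may use smoothed, tangent-based interpolation instead.

```python
from bisect import bisect_right


def evaluate_attenuation(curve, distance):
    """Linearly interpolate a gain from (distance, gain) keys sorted by distance.

    Distances before the first key or after the last key hold the end values.
    """
    if not curve:
        raise ValueError("curve needs at least one key")
    distances = [d for d, _ in curve]
    if distance <= distances[0]:
        return curve[0][1]
    if distance >= distances[-1]:
        return curve[-1][1]
    i = bisect_right(distances, distance)  # distances[i-1] <= distance < distances[i]
    (d0, g0), (d1, g1) = curve[i - 1], curve[i]
    return g0 + (g1 - g0) * (distance - d0) / (d1 - d0)
```

Giving two sources mirrored curves, one falling and one rising over the same distance span, produces the kind of crossfade described for grouped curves.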

M1 Spatial SDK Integration

Please contact info@mach1.tech if you are interested in importing the Mach1Decode API or Mach1Transcode API to your app to allow support for playing back Mach1 Spatial mixes as well as transcode and convert all spatial/surround audio formats from/to each other.

Mach1 Spatial System Hacker Help

As multichannel and spatial audio mixing becomes more common, we want content creators to be able to control our plugins with any device they build, or any new device on the market, to suit their workflow and pipeline needs.

UDP/OSC Ports

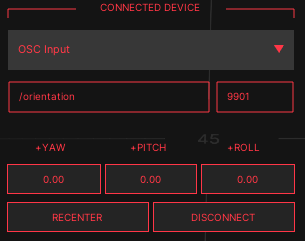

Control M1-Monitor or M1-Player by sending orientation data as OSC messages or raw UDP to the following ports:

- 9899: BoseAR Devices sending orientation data to M1-Monitor

- 9901: M1-MNTRCTRL iOS App sending orientation data to M1-Player

Orientation OSC Data

For custom orientation transmission, send the following Euler yaw/pitch/roll (YPR) angles, in degrees, to the address /orientation:

- float [0] = Yaw | 0.0 -> 360.0 | left->right ++

- float [1] = Pitch | -90.0 -> 90.0 | down->up ++

- float [2] = Roll | -90.0 -> 90.0 | left-ear-down->right-ear-down ++
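The /orientation message can be produced without any OSC library, since an OSC message is a padded address string, a padded type-tag string and big-endian 32-bit floats. The sketch below follows the OSC 1.0 encoding; the target port 9901 is taken from the M1-Player entry in the port list above, and wrapping/clamping to the documented ranges is our own assumption, so confirm both against your setup.

```python
import socket
import struct


def _osc_string(s: str) -> bytes:
    """OSC strings are ASCII, null-terminated, and padded to a multiple of 4 bytes."""
    raw = s.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def orientation_message(yaw: float, pitch: float, roll: float) -> bytes:
    """Build an OSC /orientation message carrying yaw, pitch, roll as float32."""
    yaw %= 360.0                          # wrap into 0.0 -> 360.0
    pitch = max(-90.0, min(90.0, pitch))  # clamp to -90.0 -> 90.0
    roll = max(-90.0, min(90.0, roll))
    return (_osc_string("/orientation")
            + _osc_string(",fff")
            + struct.pack(">fff", yaw, pitch, roll))  # OSC floats are big-endian


def send_orientation(yaw, pitch, roll, host="127.0.0.1", port=9901):
    """Send one orientation update over UDP (port choice is an assumption)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(orientation_message(yaw, pitch, roll), (host, port))
```

A head tracker loop would call `send_orientation` at its update rate, for example `send_orientation(45.0, 0.0, 0.0)` to turn the listener 45 degrees to the right.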

Other UDP/OSC Ports

- 10001->10200: M1-Panner instances connecting to m1-system-helper

- 10201->10300: M1-Monitor instances connecting to m1-system-helper

- 10301->10300: M1-Player instances connecting to m1-system-helper

Ports are searched and configured on launch for these ranges on the localhost of the user's computer, and connections are established using unused ports within each range.

Mach1 Panner

Interface

Description

The Mach1 Panner lets users encode input audio tracks/channels to the Mach1 Spatial format in their preferred DAW (Digital Audio Workstation). Encoding is a simple signal-distribution process with no other DSP effects, giving a complete 1:1 encode into our Vector Based Panning format, Mach1 Spatial.

Plugin Parameters

General Parameters

Input and Output Options

Stereo Parameters

M1-Panner mode for stereo input channels/tracks of audio (L & R)

Notes

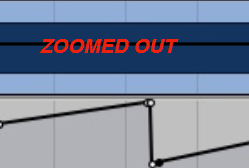

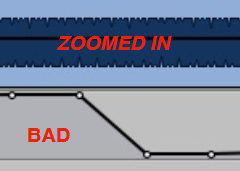

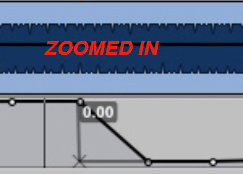

Simply un-ramp the automation to further clean up playback of a fully rotating source:

Zoomed Out View

Bad Example

Zoomed In View

Good Example

Mach1 Monitor

Interface

Description

Decoding Explained

The Mach1 Monitor lets users preview and monitor the mix as though they were head tracking in their target device/app/project. The audio output from the channel containing the Mach1 Monitor is not intended for export (unless using StereoSafe mode); it is only meant to aid mixing and mastering your spatial audio mix. The Monitor takes the multichannel Mach1 Spatial mix and downmixes it with our Mach1 Spatial VVBP algorithm to output the correct stereo image for your simulated orientation angles (Yaw, Pitch, Roll).
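Conceptually, the stereo output is a weighted sum of the Mach1 Spatial channels, with gains that depend on the current orientation. The Python sketch below applies such gains to a block of samples and can optionally sum a head-locked stereo track on top. Computing the gains is the Mach1Decode API's job and is not shown; the interleaved `[L0, R0, L1, R1, ...]` coefficient layout and the function name are assumptions for illustration, so check the SDK documentation for the real ordering.

```python
def apply_decode_coeffs(frames, coeffs, static_stereo=None):
    """Mix one block of multichannel audio down to stereo.

    frames:        list of sample frames, one value per spatial channel.
    coeffs:        assumed interleaved gains [L0, R0, L1, R1, ...], one pair per
                   channel, computed elsewhere for the current yaw/pitch/roll.
    static_stereo: optional list of (left, right) head-locked samples that are
                   added to the decoded output unchanged.
    """
    if len(coeffs) % 2:
        raise ValueError("coefficients must come in left/right pairs")
    n = len(coeffs) // 2
    out = []
    for i, frame in enumerate(frames):
        if len(frame) != n:
            raise ValueError("frame width does not match coefficient count")
        left = sum(frame[c] * coeffs[2 * c] for c in range(n))
        right = sum(frame[c] * coeffs[2 * c + 1] for c in range(n))
        if static_stereo is not None:
            left += static_stereo[i][0]
            right += static_stereo[i][1]
        out.append((left, right))
    return out
```

Since the gains change only when orientation changes, a real-time implementation would recompute them once per audio block rather than per sample.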

Plugin Parameters

The following explains the current modes of the Mach1 Monitor in common DAWs/editors.

General Parameters

Decode Selection

The decode selection determines how your spatial mix will be monitored and affects the available panning options in connected Mach1 Panners:

Settings

Monitor Modes

Allows different decoding modes for testing your spatial mix:

Timecode Offset

The timecode offset feature helps synchronize the Mach1 Player with your DAW timeline. Use it when:

- Your DAW session doesn't start at 00:00:00:00

- Your video file has a different start time than your DAW session

- You need to compensate for any system latency

To set the offset:

- Enter the timecode where your video should start in your DAW

- The Mach1 Player will automatically adjust its playback to match this position

- The offset remains active until changed, ensuring consistent sync between your DAW and video
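The offset arithmetic amounts to converting the entered timecode to seconds and subtracting it from the DAW position. This Python sketch assumes non-drop-frame timecode at an integer frame rate and uses hypothetical function names; it is not the Mach1 Player's implementation.

```python
def timecode_to_seconds(tc: str, fps: int) -> float:
    """Convert non-drop-frame HH:MM:SS:FF timecode to seconds."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} out of range for {fps} fps")
    return hh * 3600 + mm * 60 + ss + ff / fps


def player_position(daw_seconds: float, video_start_tc: str, fps: int) -> float:
    """Position inside the video for a DAW timeline position (clamped at 0)."""
    return max(0.0, daw_seconds - timecode_to_seconds(video_start_tc, fps))
```

With a session starting at 01:00:00:00, a DAW position of 3610 seconds maps to 10 seconds into the video.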

Mach1 Orientation Manager

Description

The Mach1 Orientation Manager is an external orientation device manager and utility designed for aggregating different headtracking methods. It functions as a central service that connects, manages, and routes orientation data from various devices to any app that has the client integration such as some of the Mach1 Spatial System plugins and apps. By supporting multiple hardware connections and data transmission protocols, it enables seamless integration of orientation tracking in spatial audio workflows.

This component is also designed so that anyone can open a pull request on the GitHub repo to add support for a new device or protocol:

- Codebase

- Issue Board

- Background Service: Runs as a system service or LaunchAgent

- OSC Client: UI application for monitoring and configuration

- Mach1 Monitor: For real-time spatial audio monitoring

- Mach1 Player: For video monitoring with head tracking

Interface

Parameters

The Mach1 Orientation Manager OSC Client interface contains several key components for controlling and monitoring orientation data:

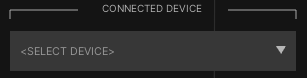

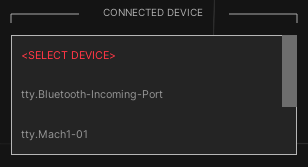

Initial client state before selecting any device

The menu will display all currently detected compatible devices. If your device isn't listed, check its connection and ensure drivers are properly installed.

- Yaw: Horizontal rotation (left/right)

- Pitch: Vertical rotation (up/down)

- Roll: Lateral rotation (tilt)

This section displays the current orientation values being received from the connected device and sent to other applications. The data is processed by the background service and made available through this client interface.

Each of these values is also a button that inverts (-) the polarity of its axis, depending on how the device is mounted or used.

- Sets the current device position as the new zero point

- Helps eliminate drift in orientation tracking

- Essential for accurate spatial audio monitoring

To ensure accurate spatial orientation, press this button when you are in your desired reference position. This calibrates the system to use your current orientation as the neutral starting point.
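Inversion and recentering can be sketched as a small filter between the device and the outgoing data: flip the sign of selected axes, then subtract a reference captured at recenter time. This Python class is hypothetical, and subtracting Euler angles axis by axis is a simplification; a robust implementation would recenter with quaternions to avoid errors when pitch and roll are both large.

```python
def wrap_degrees(angle: float) -> float:
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


class OrientationFilter:
    """Per-axis polarity inversion plus a recenter offset (simplified sketch)."""

    AXES = ("yaw", "pitch", "roll")

    def __init__(self):
        self.invert = {axis: False for axis in self.AXES}
        self.reference = {axis: 0.0 for axis in self.AXES}
        self._last = {axis: 0.0 for axis in self.AXES}

    def process(self, yaw, pitch, roll):
        """Apply inversion, then subtract the recenter reference."""
        raw = dict(zip(self.AXES, (yaw, pitch, roll)))
        self._last = {a: (-v if self.invert[a] else v) for a, v in raw.items()}
        return tuple(wrap_degrees(self._last[a] - self.reference[a]) for a in self.AXES)

    def recenter(self):
        """Treat the most recent (inverted) orientation as the new zero point."""
        self.reference = dict(self._last)
```

The output is centred on zero; if a receiver expects yaw in 0.0 -> 360.0, apply `% 360.0` to the yaw value before sending it.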

- Properly closes the connection to the selected device

- Stops the data transmission to the OSC port

Device Specific Parameters

OSC

- Set the port number for receiving OSC data

- Must match the outgoing port on any sending application

The Orientation Manager uses OSC (Open Sound Control) protocol to send orientation data to other applications. This setting allows you to specify which port should receive the orientation data.

Supperware IMU

Hardware

Device selection options in the Orientation Manager

- Serial: Using RS232 protocol for wired connections

- BLE: Bluetooth Low Energy for wireless devices

- OSC: Open Sound Control for network-based data

- Camera: Vision-based tracking (work in progress)

- Emulator: For testing and debugging

- Supperware IMU: High-precision inertial measurement units

- MetaWear/mBientLab IMUs: Bluetooth-enabled sensors

- Waves Nx Tracker: Headphone-mounted motion tracker

- M1 IMU: Mach1's proprietary orientation sensor

- WitMotion IMUs: Cost-effective motion sensors (in development)

- Custom OSC Input: Any device or app that sends OSC-formatted orientation data

Mach1 Player

The Mach1 Player is designed for playback of spatial audio content created with the Mach1 Spatial System.

Overview

Mach1 Player provides a dedicated playback environment for spatial audio content, allowing real-time head tracking and orientation control for accurate spatial audio monitoring.

Playback Options

- Toggle between flat and spherical 360° mode with the 'Z' key

- Enable reference angle overlay with the 'O' key

- Switch between stereoscopic and monoscopic videos with the 'D' key

- View player statistics by holding the 'H' key

Getting Started

Launch Mach1 Player and use the drag-and-drop interface to load your 360° video content. The player will automatically connect to Mach1 Monitor to provide orientation data and synchronize with your DAW timeline.

Mach1 Transcoder

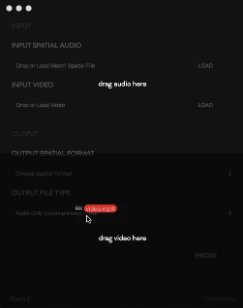

Interface

Description & User Story

Mach1 Spatial Format Explained

Mach1 Spatial is a VVBP (Virtual Vector Based Panning) or SPS spatial audio format that gives users complete freedom in their post-production mixing process by never forcing signal-altering processing on them. Users are free to apply their own DSP/ASP during mixing instead of relying on the proprietary spatial audio DSP algorithms seen in other formats or tools. The user therefore stays in control of the creative mix and can make any decision they want, whether it results in realistic spatial fields or more creative spatial sound fields.

Transcoder Explained

As a virtual vector based format, Mach1 Spatial uses no signal processing effects for pre-rendering or playing back spatial audio. Because of this major advantage, users can treat Mach1 Spatial as a master spatial audio format and use the Transcoder to downmix to all other spatial or surround formats without introducing mix-altering effects, for the most 1:1 transcoding possible.

Format Conversion (m1-transcode)

The format conversion math applies only basic coefficient changes to the audio channels. The only operations are re-ordering channels to the correct output order and distributing audio to the correct output channels; no other effects are applied to the master mix.

For more information: https://dev.mach1.tech/#mach1transcode-api
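The re-ordering part of that conversion can be expressed as a coefficient matrix applied to every sample frame. The Python sketch below builds a 0/1 matrix between the 5.1 (L,C,R,Ls,Rs,LFE) and 5.1 SMPTE (L,R,C,LFE,Ls,Rs) channel orders listed in this guide and applies it; the actual Mach1Transcode coefficients for distributing audio between different formats are not reproduced here.

```python
# Channel orders taken from the Output Types list in this guide.
FILM_51 = ["L", "C", "R", "Ls", "Rs", "LFE"]
SMPTE_51 = ["L", "R", "C", "LFE", "Ls", "Rs"]


def reorder_matrix(src, dst):
    """Build a 0/1 coefficient matrix mapping the src channel order to dst."""
    return [[1.0 if s == d else 0.0 for s in src] for d in dst]


def apply_matrix(matrix, frame):
    """out[o] = sum_i matrix[o][i] * frame[i] for one multichannel sample frame."""
    return [sum(g * x for g, x in zip(row, frame)) for row in matrix]
```

Distribution between layouts with different channel counts works the same way, except the matrix has a different shape and holds fractional gains rather than only 0s and 1s.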

Headlocked/Static Stereo

Use the Input Static Stereo Mix field to mux a master head-locked stereo track, ideal for adding music, some VO elements or experimental elements to your spatial mix. These channels remain intact until the decoding stage, when the target app sums them with the decoded stereo output of the spatial mix (if your target app supports this).

Transcoding Features

Input Options

_000 (front)

_090 (right)

_180 (back)

_270 (left)

The M1-Transcoder app will only display the available/relevant transcoding options for the Mach1 Horizon Pairs input type.
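When preparing a Mach1 Horizon Pairs input, it can help to check that all four directions are present before loading them. This hypothetical Python helper assumes each stereo file carries one of the suffixes above at the end of its filename; the M1-Transcoder's own matching rules are not documented here.

```python
import os

# Filename suffixes listed above and the facing direction (yaw) each represents.
SUFFIX_TO_YAW = {"_000": 0, "_090": 90, "_180": 180, "_270": 270}


def group_horizon_pairs(paths):
    """Map each required yaw angle to its stereo file, based on filename suffix."""
    found = {}
    for path in paths:
        stem = os.path.splitext(os.path.basename(path))[0]
        for suffix, yaw in SUFFIX_TO_YAW.items():
            if stem.endswith(suffix):
                found[yaw] = path
    missing = sorted(set(SUFFIX_TO_YAW.values()) - set(found))
    if missing:
        raise ValueError(f"missing files for angles: {missing}")
    return found
```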

Supports mp4 and mov video files. All conversions/encodes/transcodes pass the video through, so video streams are never re-encoded or otherwise affected.

Output Types

- Mach1 Spatial (Audio & Video)

- Mach1 Horizon (Audio & Video)

- Mach1 Horizon Pairs (Single Stream) (Audio & Video)

- Mach1 Horizon Pairs / Quad-Binaural (Multiple Streams) (Audio & Video)

- First Order Ambisonic ACNSN3D [YouTube] (Audio & Video) [Applies metadata for direct upload to YouTube depending on the selected File Type]

- First Order Ambisonic FuMa (Audio & Video)

- Second Order Ambisonic ACNSN3D (Audio Only)

- Second Order Ambisonic FuMa (Audio Only)

- Third Order Ambisonic ACNSN3D (Audio Only)

- Third Order Ambisonic FuMa (Audio Only)

- 5.0 Surround (L,C,R,Ls,Rs) (Audio & Video)

- 5.1 Surround (L,C,R,Ls,Rs,LFE) (Audio & Video)

- 5.1 Surround SMPTE (L,R,C,LFE,Ls,Rs) (Audio & Video)

- 5.1 Surround DTS (L,R,Ls,Rs,C,LFE) (Audio & Video)

- 5.0.2 Surround (L,C,R,Ls,Rs,Lts,Rts) (Audio Only)

- 5.1.2 Surround (L,C,R,Ls,Rs,LFE,Lts,Rts) (Audio Only)

- 5.0.4 Surround (L,C,R,Ls,Rs,FLts,FRts,BLts,BRts) (Audio Only)

- 5.1.4 Surround (L,C,R,Ls,Rs,LFE,FLts,FRts,BLts,BRts) (Audio Only)

- 7.0 Surround (L,C,R,Lss,Rss,Lsr,Rsr) (Audio & Video)

- 7.1 Surround (L,C,R,Lss,Rss,Lsr,Rsr,LFE) (Audio & Video)

- 7.1 Surround SDDS (L,Lc,C,Rc,R,Ls,Rs,LFE) (Audio & Video)

- 7.1.2 Surround (L,C,R,Lss,Rss,Lsr,Rsr,LFE,Lts,Rts) (Audio Only)

- 7.1.4 Surround (L,C,R,Lss,Rss,Lsr,Rsr,LFE,FLts,FRts,BLts,BRts) (Audio Only)

- FB360/TBE (8ch) (Audio Only)

This list is maintained here: https://dev.mach1.tech/#formats-supported

The output options are dependent on what is possible per selected format as the list above shows.

Additional Features

Audio File Loading

Video File Loading

These features are available after a successful run of the M1-Transcoder.